A Test Scorer’s Lament

Illustrator: Stephen Kroninger

Scenes from the mad, mad, mad world of testing companies

Having made my living since 1994 scoring standardized tests, I have but one question about No Child Left Behind: When the legislation talks of “scientifically-based research” (a phrase mentioned 110 times in the law), it’s not talking about the work I do, right? Not test scoring, right? I hope not, because if the 15 years I just spent in the business taught me anything, it’s that the scoring of tens of thousands of student responses to standardized tests per year plays out as theatre of the absurd.

I’m not talking about the scoring of multiple-choice standardized tests; those are scored electronically, though that process isn’t as foolproof as you’d like to believe. No, I’m talking about open-ended items and essay prompts, where students fill the blank pages of a test booklet with their own thoughts and words.

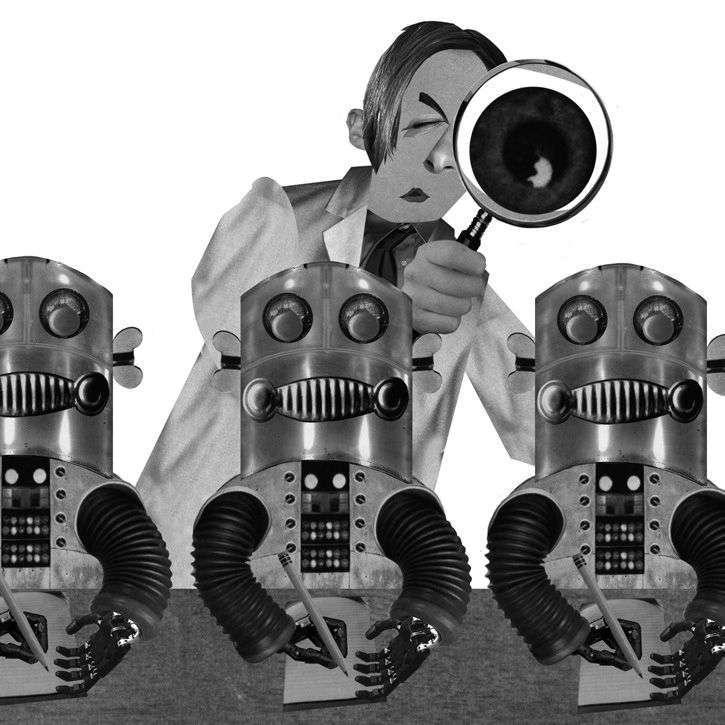

If your state has contracted that job out to one of the massive multinational, for-profit companies that largely make up the testing industry, you’ve surely been led to believe those student responses will be assessed as part of a systematic process as sophisticated as anything NASA could come up with. The testing companies probably told you all about the unassailable scoring rubrics they put together with the help of “national education experts” and “classroom teachers.” Perhaps they’ve told you how “qualified” and “well-trained” their “professional scorers” are. Certainly they’ve paraded around their omniscient “psychometricians,” those all-knowing statisticians with their inscrutable talk of “item response theory,” “T-values,” and the like. With all that grand jargon, it’s easy to assume that the job of scoring student responses involves bespectacled researchers in pristine, white lab coats meticulously reviewing each and every student response with scales, T-squares, microscopes, and magnifying glasses.

Well, think again.

If test scoring is “scientifically-based research” of any kind, then I’m Dr. Frankenstein. In fact, my time in testing was characterized by overuse of temporary employees; scoring rules that were alternately ambiguous and bizarre; and testing companies so swamped with work and threatened by deadlines that getting any scores on tests appeared to be more important than getting the right scores. I’d say the process was laughable if not for the fact that those test scores have become so important to the landscape of modern American education.

I offer the following true stories from my time in testing as a cautionary tale. Although they are random scenes, they are representative of my decade-and-a-half-long experience in the business. Remember them when next you hear some particularly amazing or particularly depressing results from another almighty “standardized” test.

True Stories from the Field

On the second Monday of a two-week range-finding session, the project manager of a test-scoring company convenes the meeting in a large conference room. The participants are esteemed experts in writing and education from around the United States who have been invited to this leafy campus to share their expertise, but the project manager has to make an announcement they may not like. “I have some bad news,” she says.

“It seems we haven’t been scoring correctly.”

The room buzzes with both disappointment that such an assembly of experts might have erred and disbelief that anyone would doubt them.

“Says who?” a bespectacled fellow asks.

“Well,” the project manager says, “our numbers don’t match up with what the psychometricians predicted.”

Grumblings fill the room, but one young man new to the scoring process looks confused.

“The who?” he asks.

“The psychometricians,” the project manager says. “The stats people.”

“They read the essays this weekend?” the young man asks.

“Uh, no,” the project manager laughs. “They read their spreadsheets.”

“So?” the young man says, looking perplexed.

The project manager explains. “The psychometricians predicted between 6 and 9 percent of the student essays would get 1’s on this test, but from our work last week we’re only seeing 3 percent. The rest of the numbers are pretty much on, but we’re high on 2’s and short on 1’s.”

The young man stares at the project manager. “The psychometricians know what the scores will be without reading the essays?”

The project manager looks down. “I guess that’s one way to look at it.”

After three days of contentious and confusing training sessions, 100 temporarily employed professional scorers begin assessing high school essays. One scorer leans toward her neighbor and hands him the essay she’s been reading. “Al,” she whispers, “what score would you give this paper?”

The man smiles. “Let me see, Tammy,” he says, taking the proffered essay and replacing it with the one in his hand. “What would you give this?”

For about a minute, Al and Tammy each scan the other’s essay.

“What do you think?” Tammy asks.

“I dunno. Yours seems like a 4.”

“A 4?”

“Yup. Seems ‘adequate’. What do you think?”

Tammy shrugs. “I was thinking 3. It doesn’t say much.”

Al shrugs. “Maybe you’re right…”

“Maybe you’re right…”

“What would you give mine?” Al asks.

“I think a 5.”

“A 5?” Al questions. “I thought it was a 4.”

“Yours has all that development and details…”

“But the grammar…”

“I liked yours better than mine,” Tammy says.

“And I liked yours better than mine.”

The scorers exchange essays again. Tammy smiles, patting Al’s arm. “Real glad we had this talk, Al,” she says. “It’s been a great help.” Then Tammy laughs.

The project manager of a test-scoring company addresses an employee. “Bill,” the project manager says, “I need you in cubicle No. 3 tomorrow to retrain item No. 7 of the science block. Harold’s team screwed it up.”

“No problem,” Bill says.

“Good,” the project manager says, nodding. He looks over a list in his hand. “For that retraining, do you want scorers that are slow and accurate, or ones that are fast and, uh, not so accurate?” He makes air quotes around the final three words.

Bill briefly ponders that question. “I take it you don’t have any scorers that are both fast and accurate?” he asks.

The project manager doesn’t even look up from the list. “Please…”

In the hot sun, at a table beside a hotel pool, two men and a woman drink icy cocktails to celebrate the successful completion of a four-week scoring project. The hotel manager brings a phone to their table. “Call for you,” he says to the woman, whose face blanches when the home office tells her that another dozen tests have been found.

“I know,” the woman says, “someone has to score them. OK, read them to me over the phone.” The woman turns the phone’s speaker on, and she and the men listen to student responses read by a squeaky-voiced secretary several thousand miles away. One of the men waves to the bartender to bring another round, and, with drinks but not rubrics in their hands, the two men and the woman score each student response over the telephone. When the voice on the phone goes silent after reading each response, the woman looks at the number of fingers the men hold in the air.

“Three,” the woman says into the phone. “That’s a three.”

This goes on for an hour and two rounds of drinks.

A project manager for a test-scoring company addresses the supervisors hired to manage the scoring of a project. The project is not producing the results expected, to the dismay of the test-scoring company and its client, a state department of education. The project manager has been trying to calm the concerned employees, but she’s losing patience. She’s obviously had enough.

“I don’t care if the scores are right,” the project manager snarls. “They want lower scores, and we’ll give them lower scores.”